The Case for a Logarithmic Performance Metric

Traditional comparisons of benchmark results focus on percentage differences. While seemingly simple to understand and compute, such comparisons pose a number of problems that are solved by using a different basis for comparison: a logarithmic unit with the rather unfortunate name of centinepers, which we shall hereafter refer to as log points.

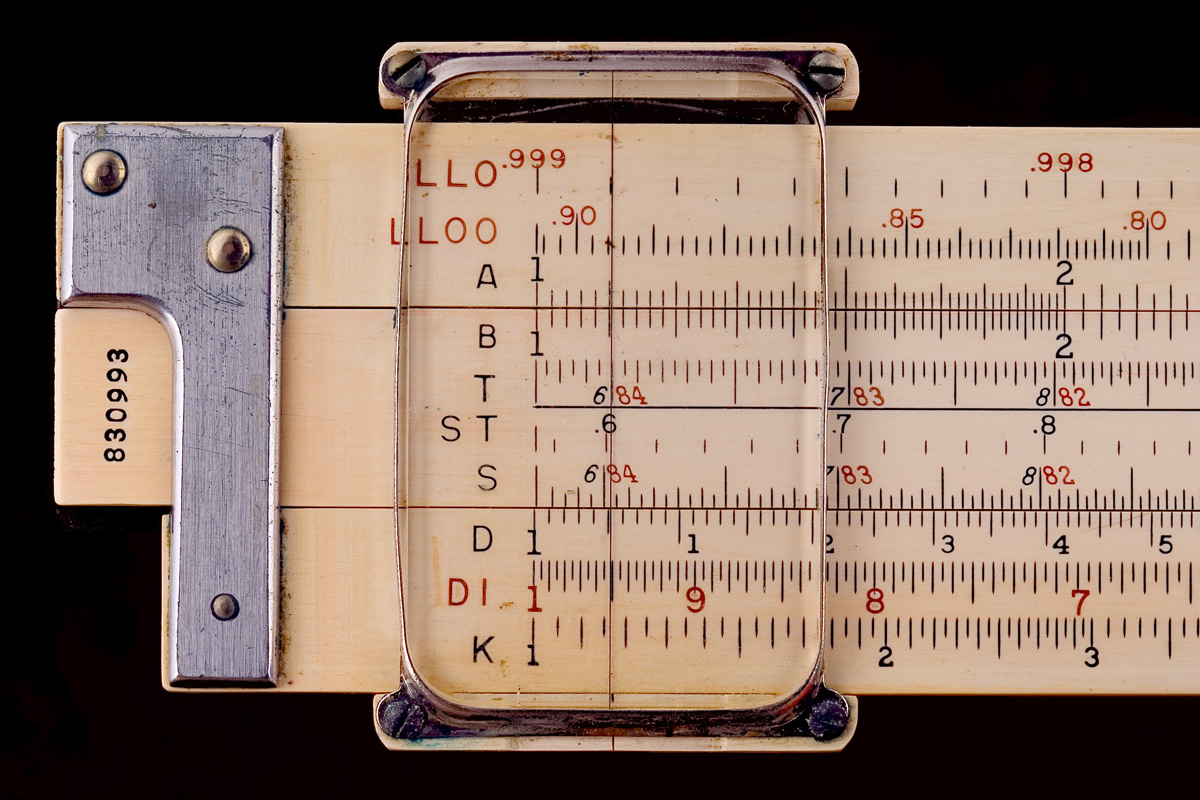

In the form of log tables and slide rules, logarithms have been used for centuries to simplify human calculations. Log points carry this advantage into scenarios with a large quantity of numbers to be compared, such as the analysis of benchmark results for performance tuning.

A score in log points is computed as follows: if a benchmark completes x operations per second, then the score in log points is:

100 · ln x

where ln is the natural logarithm function. For instance, a benchmark that completes 22,026 operations per second scores 1000 log points.
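As a minimal sketch of this definition in Python (the function names `log_points` and `from_log_points` are illustrative and do not come from any library):

```python
import math

def log_points(ops_per_second: float) -> float:
    """Convert a raw score (operations per second) to log points: 100 * ln(x)."""
    if ops_per_second <= 0:
        raise ValueError("a raw score must be positive to have a logarithm")
    return 100.0 * math.log(ops_per_second)

def from_log_points(points: float) -> float:
    """Invert the conversion: recover operations per second from log points."""
    return math.exp(points / 100.0)

score = log_points(22026)      # very close to 1000 log points
raw = from_log_points(1000)    # about 22,026 operations per second
```

The inverse is handy when a log-point score needs to be turned back into a raw rate for a report.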

This particular logarithmic unit was chosen for its familiarity: in small quantities, a log point is approximately equal to a percentage point. If two benchmark scores differ by 1%, they also differ by about 1 log point. In fact, if they differ by fewer than a dozen percentage points, they differ by roughly the same number of log points. (The two scales diverge as the difference increases because log points reflect compounding, as described below.)

For example, if a benchmark score changes from 3583 to 3505 operations per second, what is the percentage change? It’s hard to compute mentally. In contrast, using log points, this would be a drop from 818.4 to 816.2, which is clearly a difference of 2.2 points, corresponding to a 2.2% performance drop. Log points make this difference obvious and easy to calculate.
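The same comparison can be checked in a few lines of Python. This is only a sketch, and the helper names are made up for illustration:

```python
import math

def percent_change(old: float, new: float) -> float:
    """Ordinary percentage change from old to new."""
    return 100.0 * (new - old) / old

def log_point_change(old: float, new: float) -> float:
    """Change in log points; equal to 100*ln(new) - 100*ln(old)."""
    return 100.0 * math.log(new / old)

old_score, new_score = 3583, 3505
pct = percent_change(old_score, new_score)
pts = log_point_change(old_score, new_score)
print(f"{pct:.1f}% vs {pts:.1f} log points")
```

Both values round to -2.2 here, which is the small-difference agreement between the two scales described above.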

When I write microbenchmarks, I usually report log points alongside the raw scores. Here are some of the reasons:

Reduced ambiguity

Whether comparing operations per time or time per operation, and whether looking at an increase or a decrease, log points give the same answer, which is not true of percentage points.

- If one benchmark run is twice as fast as another, that could be a 100% increase in operations or a 50% reduction in time, depending on whether the person reporting the results wants to make the difference look large or small.

- If a benchmark score decreases by 50%, then an increase of 100% (not 50%) is required to recover the loss.

On the other hand, all of these differences would equal about 70 log points, so there is no ambiguity. A 70-point gain is required to recover a 70-point loss.
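A short Python sketch makes the symmetry concrete. The raw rates below are hypothetical numbers chosen only so that one run is twice as fast as the other:

```python
import math

def log_point_change(old: float, new: float) -> float:
    """Change in log points from old to new."""
    return 100.0 * math.log(new / old)

# Hypothetical runs: the new one completes twice as many operations per second.
ops_old, ops_new = 1000.0, 2000.0
time_old, time_new = 1.0 / ops_old, 1.0 / ops_new   # seconds per operation

gain_in_ops = log_point_change(ops_old, ops_new)       # about +69.3
change_in_time = log_point_change(time_old, time_new)  # about -69.3
loss_then_recover = log_point_change(ops_new, ops_old) + gain_in_ops  # about 0
```

Whichever way the result is expressed, the magnitude is the same; only the sign flips between "more operations" and "less time".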

Consistent scaling

If a benchmark measurement has a 2% margin of error, that is always 2 log points, regardless of the benchmark score. With raw scores, 2% could represent 1 point or 4000 points, depending on whether the raw score is 50 or 200,000.

Similarly, if an upgraded system is, say, 70% faster than the old one, you can use a calculator to determine that this equals 53 log points, and then add 53 log points to each benchmark score—a task that is easier to do mentally than to multiply them by 1.7.

Compounding

If two seemingly independent changes (say, a CPU clock speed boost and a compiler optimization) each earn a 25% improvement, and we use both, then we should not expect a 50% improvement, but rather a 56% improvement. That difference of 6 percentage points is a kind of compounding, and is not easily seen or mentally calculated in ordinary percentages.

In contrast, those same two changes would each gain 22.3 log points, and when combined, should gain 22.3 + 22.3 = 44.6 points. If they were found to gain only 40.5 points (from a 50% improvement), the 4-point shortfall would be obvious, and could point the way to a new understanding and further improvements.
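The arithmetic in this example can be reproduced with a small Python sketch (the helper names are illustrative assumptions):

```python
import math

def to_log_points(factor: float) -> float:
    """Speedup factor (1.25 means 25% faster) expressed in log points."""
    return 100.0 * math.log(factor)

def to_factor(points: float) -> float:
    """Log-point gain converted back to a speedup factor."""
    return math.exp(points / 100.0)

each = to_log_points(1.25)            # about 22.3 points per change
expected = each + each                # about 44.6 points if independent
expected_factor = to_factor(expected) # 1.25 * 1.25 = 1.5625, i.e. about 56%

observed = to_log_points(1.50)        # about 40.5 points for a 50% improvement
shortfall = expected - observed       # about 4.1 points
```

Adding in log-point space corresponds to multiplying speedup factors, which is why the expected combined gain is 56% rather than 50%.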

Comparability

This is the major advantage of log points.

To scan for significant patterns among a large number of raw benchmark scores requires either mental division (to compute pairwise percentage differences) or automated analysis. Mental division by large numbers is difficult and imprecise, and automated analysis is complicated by the fact that there are often many possible pairwise comparisons that could be made, so the analysis must determine what comparisons are of interest in order to avoid drowning the user in data.

Thus, either the human must do difficult arithmetic, or the computer must be taught how to provide insight. In contrast, using log points lets the computer do the arithmetic while the human provides the insight – a much more satisfactory division of labour.

For example, consider the following benchmark scores:

Each column represents a benchmark, and each row represents a configuration used to run that benchmark. For each benchmark, it can be seen that “Opt. 1” improves the score, “Opt. 2” improves it by a bit less, and with both, the scores are best of all.

Now consider the same scores presented as log points:

For benchmark A, we can now tell that “Opt. 1” improves the score by 4.4 points, “Opt. 2” by 3.5 points, and both combined give 7.7 points, all just from mental subtraction. If the opts were independent, we would have expected a combined 4.4 + 3.5 = 7.9 points, so 7.7 seems reasonable.

For benchmark B, the opts gain 5.4 and 4.4 points each, and both together gain 9.6 points. It may be interesting to find out why the opts have a larger impact on B than on A.

For benchmark C, the opts gain 4.4 and 3.4 points each, but both together gain only 4.9 points. The opts are not behaving independently in this case, and it may be interesting to find out why.

Thanks to log points, we were able to derive all these insights mentally, using just subtraction.

It is not impossible, of course, to determine all this from the raw scores, if one is willing to do mental division of four- or five-digit numbers to two figures of precision to compare each and every pair of scores that may be of interest. In contrast, a computer can easily calculate a large number of benchmark scores in the form of log points, leaving the human free to scan many results, doing many comparisons and searching for patterns using just mental subtraction.
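The independence check applied to benchmarks A, B, and C above amounts to one addition and one subtraction per benchmark. As a sketch, the Python below uses only the log-point gains quoted in the text; the 1-point threshold for flagging a benchmark is an arbitrary assumption for illustration, not a recommendation from this article:

```python
# Gains in log points as quoted in the text: (Opt. 1, Opt. 2, both combined).
gains = {
    "A": (4.4, 3.5, 7.7),
    "B": (5.4, 4.4, 9.6),
    "C": (4.4, 3.4, 4.9),
}

def shortfall(opt1: float, opt2: float, both: float) -> float:
    """How far the combined gain falls short of the sum of the individual gains."""
    return (opt1 + opt2) - both

THRESHOLD = 1.0  # hypothetical cutoff, in log points

shortfalls = {name: round(shortfall(*g), 1) for name, g in gains.items()}
flagged = [name for name, s in shortfalls.items() if s > THRESHOLD]
```

With these figures, A and B each fall short by about 0.2 points, while C falls short by about 2.9 points and is the only one flagged.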

Log points also look good on a chart. Adding horizontal grid lines every log point gives the chart a sense of scale, and the magnitude of a small percentage difference is visually obvious.

For example, below is a graph of the A column from the above example:

It’s immediately obvious, for example, that the difference between Opt. 1 and Opt. 2 is about one percent.

Rules for mental math

You can relate log point scores to raw scores in your head if you start with these three rules of thumb:

- A 1% increase is about 1 log point

- A 2× increase is about 70 log points

- A 20× increase is about 300 log points

Adding log points multiplies the gains; so, for example, a gain of 370 log points equals 300+70, which gets you a 20× increase followed by a 2× increase, giving a 40× increase overall. Likewise, 230 log points equals 300-70, which gets you a 20× increase followed by a 2× decrease, giving a 10× increase overall.†
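To see how close these rules of thumb are, the following Python sketch compares each rounded rule with its exact value and converts the two worked examples back to factors. The names are illustrative only:

```python
import math

def to_log_points(factor: float) -> float:
    """Exact log-point value of a multiplicative change."""
    return 100.0 * math.log(factor)

def to_factor(points: float) -> float:
    """Multiplicative change corresponding to a log-point difference."""
    return math.exp(points / 100.0)

# (factor, rule-of-thumb log points) for the three rules above.
rules = [(1.01, 1), (2.0, 70), (20.0, 300)]
rounding_errors = [rule - to_log_points(factor) for factor, rule in rules]

gain_370 = to_factor(370)   # about 40.4, close to the 40x estimate
gain_230 = to_factor(230)   # about 9.97, close to the 10x estimate
```

The rounding errors are all well under one log point, with the 2× rule contributing the largest (about 0.7).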

Conclusion

When running benchmarks for performance tuning, reporting the results in log points reduces ambiguity and simplifies the mental arithmetic required to compare benchmark results. Log points make it easier for people to search for patterns and anomalies among large quantities of benchmark results.

† Our estimates are off by a bit, because all of the rules above are rounded, so each one you apply can introduce an error of up to about 1%. Rule 2 introduces the most error: rather than 70 log points, the correct value would be about 69.3, which I think you’ll agree is a much more awkward number.